Since the launch of ChatGPT, Generative Artificial Intelligence, or GenAI, has begun to revolutionize technology and business. With its impressive ability to create various types of content, from text and images to code, GenAI presents both exciting opportunities and significant challenges.

This momentum doesn’t look like it’s stopping anytime soon, either. Gartner estimates that by 2026, 75% of businesses will use GenAI to create synthetic customer data. A 2023 report by McKinsey estimates that GenAI could add up to $4.4 trillion in value annually across 63 use cases globally.

However, as Elon Musk commented in an interview with Rishi Sunak, “AI will be a force for good most likely but the probability of it going bad is not zero percent so we need to mitigate the downside potential.”

As organizations look to integrate GenAI into their operations, understanding and managing the associated risks becomes essential to fully realize its potential while avoiding possible pitfalls. Despite the hype surrounding GenAI, many companies are still cautious. Concerns about privacy, security, copyright issues, and the risk of bias and discrimination are major factors driving this hesitance.

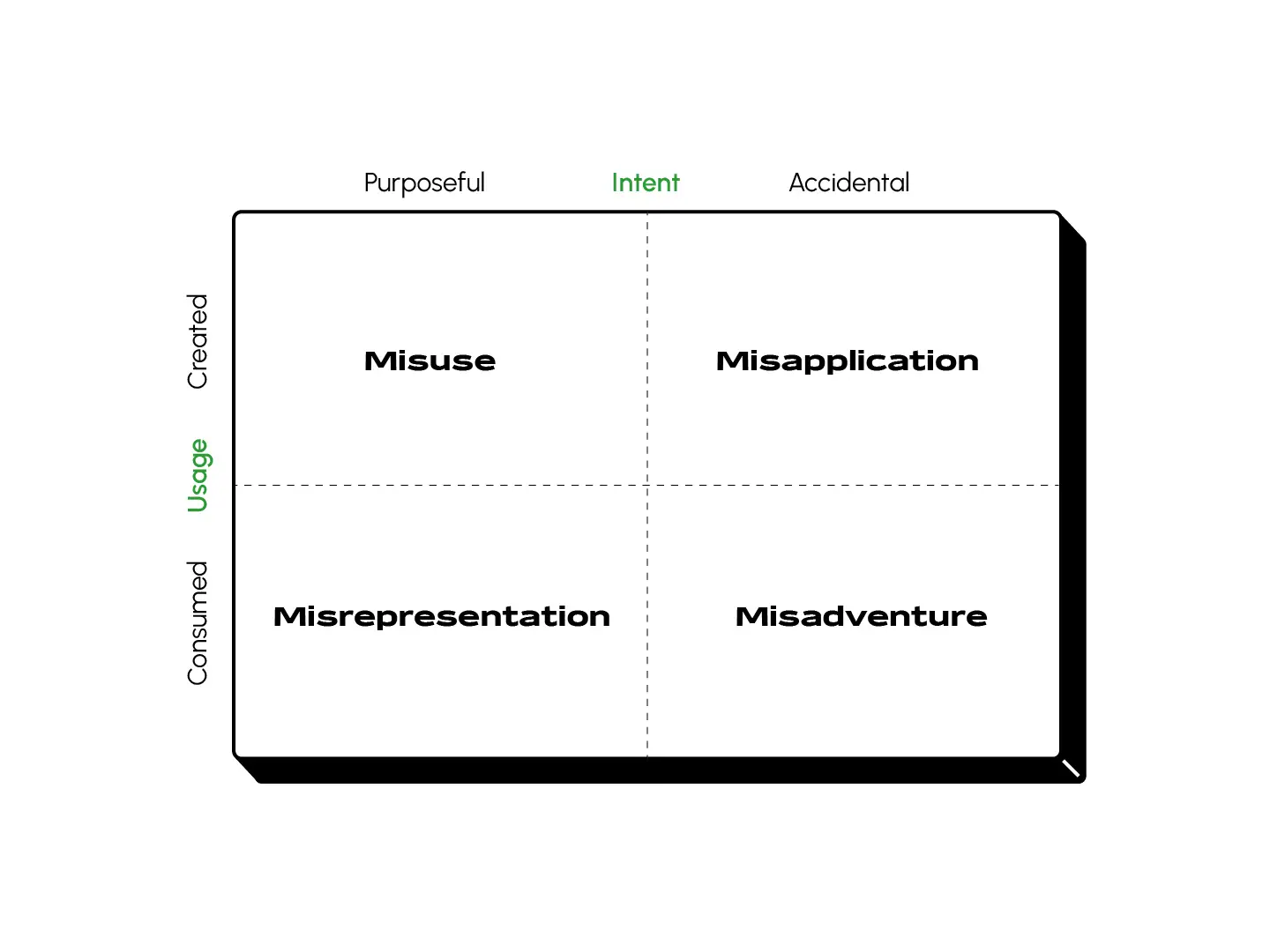

These concerns can be examined along two dimensions: intent and usage. Intent refers to whether the misuse of GenAI is deliberate or accidental, while usage differentiates between generating content with GenAI and consuming content created by others.

Different Types of Risks

To navigate the risks associated with GenAI, it’s helpful to analyze them through the lenses of intent and usage. This approach distinguishes between accidental misapplication and deliberate misuse of GenAI, and between creating content with GenAI and consuming content produced by others. Combining the two lenses yields four types of risk.

Here’s a framework by Harvard Business Review that highlights the main challenges of GenAI:

- Misuse involves the unethical or illegal use of GenAI’s capabilities. As GenAI technology has advanced, it has unfortunately become a tool for malicious activities, like scams and misinformation. A glaring example is the rise of deepfakes—highly realistic but fake media—that have been used for social engineering, financial fraud, and even election manipulation. This highlights the need for strong measures to detect and prevent the misuse of GenAI.

- Misapplication occurs when GenAI tools are used incorrectly, leading to inaccuracies or unintended consequences. One well-known issue is “hallucination,” where GenAI produces plausible-sounding but incorrect information. As GenAI technology continues to evolve, managing these inaccuracies will become increasingly important.

- Misrepresentation happens when GenAI-generated content, created by third parties, is used or spread despite doubts about its authenticity. Deepfakes and other misleading content can spread quickly and cause reputational damage or misinformation. This underscores the need for vigilance in verifying the authenticity of GenAI content.

- Misadventure refers to the accidental consumption and dissemination of misleading or false GenAI content. A notable example is a deepfake image of an explosion at the Pentagon, which led to a temporary stock market dip. Such incidents illustrate the real-world impact of unintentionally spreading misleading information.
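The four risk types above can be read as the cells of a two-by-two grid of intent and usage. The sketch below is one way to encode that grid. The enum names, the `RISK_MATRIX` table, and the `classify_risk` helper are hypothetical names chosen for illustration, and the pairing of each risk with a cell is an interpretation of the descriptions above rather than an official specification of the framework.

```python
from enum import Enum


class Intent(Enum):
    DELIBERATE = "deliberate"
    ACCIDENTAL = "accidental"


class Usage(Enum):
    CREATION = "creation"        # generating content with GenAI
    CONSUMPTION = "consumption"  # consuming content created by others


# Assumed mapping: each (intent, usage) pair corresponds to one risk type.
RISK_MATRIX = {
    (Intent.DELIBERATE, Usage.CREATION): "Misuse",
    (Intent.ACCIDENTAL, Usage.CREATION): "Misapplication",
    (Intent.DELIBERATE, Usage.CONSUMPTION): "Misrepresentation",
    (Intent.ACCIDENTAL, Usage.CONSUMPTION): "Misadventure",
}


def classify_risk(intent: Intent, usage: Usage) -> str:
    """Return the risk type for a given combination of intent and usage."""
    return RISK_MATRIX[(intent, usage)]


if __name__ == "__main__":
    # Someone unknowingly reshares a fake image they found online.
    print(classify_risk(Intent.ACCIDENTAL, Usage.CONSUMPTION))
```

A lookup table like this could help a risk team tag incidents consistently, although real incidents may not fall cleanly into a single cell.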

Strategies for Mitigating Generative AI Risks

To effectively manage the risks associated with GenAI, organizations can adopt several strategies:

- Organizations should establish clear AI principles that reflect their core values and ethics. This alignment ensures that GenAI is used responsibly and avoids causing personal or societal harm. By setting guidelines that emphasize transparency, fairness, and accountability, organizations can protect against misuse and misapplication.

- Watermarking GenAI-generated content can help maintain transparency by clearly indicating the source and authenticity of the output. Major AI providers are starting to adopt this practice, and organizations should follow their lead to help users differentiate between AI-generated and human-created content.

- Organizations should develop controlled GenAI environments tailored to their specific needs. This approach allows for content management and helps prevent the inclusion of sensitive information in GenAI systems. Fine-tuning large language models (LLMs) and incorporating privacy management tools can enhance control and reduce risk.

- Internal training programs are essential for educating employees about the responsible use of GenAI. Raising awareness about potential biases, transparency issues, and regulatory concerns ensures that teams are well-prepared to handle GenAI content appropriately.

- Establishing robust systems for validating AI output and cross-checking content for accuracy is crucial. Automated tools and manual review processes can help detect inaccuracies and verify the authenticity of GenAI-generated content. Implementing a “four-eye” control mechanism, where content is reviewed by multiple individuals, can further improve quality control.

- Even with the best precautions, some risks may emerge and require quick action. Organizations should develop comprehensive damage mitigation plans, drawing from cybersecurity incident response practices. An internal task force should be ready to manage and communicate risks effectively, ensuring transparency about governance and risk management practices.
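As an illustration of the “four-eye” control mentioned above, here is a minimal sketch of an approval gate for GenAI-assisted content. The `Draft` class, its fields, and the default of two distinct reviewers are assumptions made for this example, not a prescribed implementation. Many organizations count the author as one of the two pairs of eyes, in which case the threshold would be one reviewer. The sketch assumes Python 3.9 or later.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """A piece of GenAI-assisted content awaiting sign-off (hypothetical model)."""

    author: str
    text: str
    approvals: set[str] = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        # Authors cannot sign off on their own content.
        if reviewer == self.author:
            raise ValueError("authors cannot approve their own drafts")
        self.approvals.add(reviewer)

    def is_publishable(self, required_reviewers: int = 2) -> bool:
        # A set ignores repeat approvals, so this counts distinct reviewers.
        return len(self.approvals) >= required_reviewers


if __name__ == "__main__":
    draft = Draft(author="alice", text="AI-assisted press release")
    draft.approve("bob")
    print(draft.is_publishable())  # one reviewer so far
    draft.approve("carol")
    print(draft.is_publishable())  # two distinct reviewers
```

Storing approvals in a set means the same person approving twice does not satisfy the threshold, which is the point of requiring multiple individuals.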

Conclusion

Generative AI is a transformative technology with the potential to revolutionize various aspects of our lives. However, its rapid advancements also bring forth a spectrum of risks, from malicious misuse and unintentional errors to the spread of misinformation and unintended consequences.

To harness the full potential of GenAI while minimizing its risks, organizations must prioritize ethical and responsible development. This involves establishing clear AI principles, implementing robust security measures, and fostering transparency through practices like watermarking. Equally important is educating employees and the public about the potential pitfalls of GenAI and developing strategies to mitigate its negative impacts.

As we move forward into an era where AI-generated content becomes increasingly prevalent, striking the right balance between innovation and responsibility will be crucial. Laws and governance will slowly catch up, but it is up to us to ensure that this powerful technology serves as a force for good, driving progress while safeguarding our values and security. The future of AI is in our hands – let’s shape it wisely.

Written by Prameet Manraj

Prameet is a Team Liaison at Pvotal Technologies. Passionate about all things digital since childhood, he likes to review the good and bad sides of new technology.

Sources:

- https://www.gartner.com/en/documents/4785331

- https://hbr.org/2023/11/navigating-the-new-risks-and-regulatory-challenges-of-genai

- https://www.youtube.com/watch?v=AjdVlmBjRCA

- https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#introduction

- https://www.gartner.com/en/articles/3-bold-and-actionable-predictions-for-the-future-of-genai